Converting Geometries

Vectorization and Geometry Creation

The device integrates several elements:

- Triangle based geometries ( shells, meshes, some texts that may be rendered using triangles )

- Line based geometries ( polylines, polygon contours )

- Point based geometries ( points, line end points )

- Text based geometries ( MTEXT objects )

The device does nothing by default for custom primitives: these have to be integrated manually.

All geometries that are created here by the vectorization process are first isolated and are not linked to the camera. A GsMetafile object defined by the Teigha libraries is created for each object to render in the database. In the device, this metafile is the ROD::OdShape class.

This ROD::OdShape class stores a list of HOOPS Luminate shapes matching the geometries that have been received for the metafile between calls to ExGsOpenGLVectorizeView::beginMetafile and ExGsOpenGLVectorizeView::endMetafile. Therefore, a single ROD::OdShape object may contain text, point, line and triangle objects.

The destruction of shapes is done automatically thanks to the smart pointer system of the Teigha libraries: the destruction of a ROD::OdShape instance causes all its shapes to be destroyed as well, which in turn causes the destruction of the instance of each shape through HOOPS Luminate’s scene graph destruction system. Please refer to this page: Life Cycle of HOOPS Luminate Objects for all details on the scene graph life cycle.

Geometry Channels

Any geometrical shape in HOOPS Luminate may have several data channels for each vertex (see RED::IMeshShape or RED::ILineShape for instance). See the ExGsOpenGLVectorizeView::endMetafile call to figure out some of the data channels that are used in the scope of the device. Data channels are per vertex attributes of the geometry.

We may find the following data associated to a vertex:

- A position ( x, y, z ): 3 floats

- A normal ( nx, ny, nz ): 3 floats

- A set of UV coordinates ( u ) or ( u, v ): 1 or 2 floats - for line’s parametric length or optionally to display bitmap images with polygons

- A vertex color ( r, g, b, a ): 4 bytes. See the Color per material or color per vertex paragraph for details on this

- A custom depth ( z ): 1 float. See below for details on custom depth sorting of primitives

As you will see inside the ExGsOpenGLVectorizeView::endMetafile method, point, line and triangle based shapes are very similar. The only difference among them is the kind of primitive that is set up for each: ( i0, i1, i2 ) are the three indices needed to define a triangle; ( i0, i1 ) defines a line and ( i0 ) defines a point. HOOPS Luminate always uses indexed datasets.
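The relationship between the three primitive kinds can be illustrated with a minimal sketch. The `IndexedShape` and `PrimitiveKind` types below are hypothetical stand-ins written for this page, not HOOPS Luminate classes; they only show that the same vertex array can be referenced by 1, 2 or 3 indices per primitive.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for an indexed shape (not a HOOPS Luminate type).
// The enum value is the number of indices needed per primitive.
enum class PrimitiveKind : std::size_t { Point = 1, Line = 2, Triangle = 3 };

struct IndexedShape {
    PrimitiveKind kind;
    std::vector<float> positions;        // position channel: x, y, z per vertex
    std::vector<std::uint32_t> indices;  // 1, 2 or 3 indices per primitive

    std::size_t vertexCount() const { return positions.size() / 3; }

    // Number of primitives described by the index list.
    std::size_t primitiveCount() const {
        const std::size_t perPrimitive = static_cast<std::size_t>(kind);
        assert(indices.size() % perPrimitive == 0);
        return indices.size() / perPrimitive;
    }
};

// Builds the same four vertices as points, as a closed contour, or as two triangles.
inline IndexedShape makeSquare(PrimitiveKind kind) {
    IndexedShape s{kind,
                   {0.0f, 0.0f, 0.0f,  1.0f, 0.0f, 0.0f,
                    1.0f, 1.0f, 0.0f,  0.0f, 1.0f, 0.0f},
                   {}};
    switch (kind) {
        case PrimitiveKind::Point:    s.indices = {0, 1, 2, 3}; break;
        case PrimitiveKind::Line:     s.indices = {0, 1, 1, 2, 2, 3, 3, 0}; break;
        case PrimitiveKind::Triangle: s.indices = {0, 1, 2, 0, 2, 3}; break;
    }
    return s;
}
```

Only the index list changes between the three variants; the vertex channels are shared, which is why the three code paths in endMetafile look so similar.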

Primitive Batching

A key performance factor of this device implementation is in the batching system that is used for the k2dOptimized / k2DWireframe rendering modes. See the ROD::OdBatch class implementation for that.

HOOPS Luminate is using a real heavyweight scene graph architecture: in HOOPS Luminate, a shape object (a node or leaf in the scene graph) has several properties:

- Bounding spheres

- Layers (not to be confused with .DWG file layers: layers in HOOPS Luminate are visual property layers, used for geometry visibility filtering, material configuration, etc.)

- Transforms

- Material properties

- Parent / children access links

- Custom user data

- Custom culling properties

- …

Consequently, the size of a single shape object in HOOPS Luminate is quite big, and allocating around 1 million shapes may consume more than 1 GB of memory. This is not a problem in most CAD applications, for which tessellated geometries consume much more than the cost of storing the shapes themselves, and for which you need all layers and HOOPS Luminate scene graph properties. It may however be a problem in the .DWG world, where drawings can store lots of very small objects.

In the case of the device, we do not use transform matrices inheritance rules, materials are not inherited and we don’t need layers (the Gs vectorizer does the layer filtering before we reach the device level), so we can implement something better than the 1 metafile = 1 HOOPS Luminate shape model.

The ROD::OdBatch class associates one unique (int) value with each metafile. All contents of the metafile are batched into larger shapes, giving HOOPS Luminate its best rendering speed capability. Internally, HOOPS Luminate will re-batch all batches created this way, looking for the optimal GPU memory layout.

The implementation of ROD::OdBatch has been designed so that the editing orders it receives are themselves batched: metafile destruction orders are collected, sorted and processed in a coherent manner so that performance remains good while editing the contents of the batch. See the ROD::OdBatch::Synchronize and ROD::OdBatch::FlushRemoveOrders methods for details on this process.
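The idea of deferring and grouping destruction orders can be sketched as follows. This `Batch` class is a hypothetical simplification written for illustration; the actual ROD::OdBatch implementation is more involved and its internals are not reproduced here. The sketch assumes each metafile contributes a contiguous range of indices to one shared buffer.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical simplification of a batch: many metafiles share one buffer.
class Batch {
public:
    // Appends a metafile's indices to the shared buffer; returns its integer id.
    int add(const std::vector<int>& indices) {
        ranges_.push_back({indices_.size(), indices.size(), true});
        indices_.insert(indices_.end(), indices.begin(), indices.end());
        return static_cast<int>(ranges_.size()) - 1;
    }

    // Records a destruction order; the shared buffer is not touched yet.
    void remove(int id) { pending_.push_back(id); }

    // Sorts and deduplicates pending orders, then compacts the buffer in one pass.
    void flushRemoveOrders() {
        std::sort(pending_.begin(), pending_.end());
        pending_.erase(std::unique(pending_.begin(), pending_.end()), pending_.end());
        for (int id : pending_) {
            assert(id >= 0 && static_cast<std::size_t>(id) < ranges_.size());
            ranges_[static_cast<std::size_t>(id)].alive = false;
        }
        pending_.clear();

        std::vector<int> compacted;
        for (Range& r : ranges_) {
            if (!r.alive) { r.count = 0; continue; }
            const std::size_t newOffset = compacted.size();
            for (std::size_t i = 0; i < r.count; ++i)
                compacted.push_back(indices_[r.offset + i]);
            r.offset = newOffset;
        }
        indices_.swap(compacted);
    }

    std::size_t size() const { return indices_.size(); }
    const std::vector<int>& data() const { return indices_; }

private:
    struct Range { std::size_t offset; std::size_t count; bool alive; };
    std::vector<int> indices_;   // shared buffer for all metafiles
    std::vector<Range> ranges_;  // one range per metafile id
    std::vector<int> pending_;   // destruction orders not yet processed
};
```

Removing N metafiles this way rebuilds the shared buffer once instead of N times, which is the property the paragraph above describes.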

Adding Objects

So far, we do not see anything on screen because objects that have been created have no association with any camera. A HOOPS Luminate shape object must be added to a camera to be visible.

We face another complex part of the device architecture here: we do not know in advance which object has to be seen by which view. We discover this during the second vectorization process, for which ExGsOpenGLVectorizeView::playMetafile calls are issued rather than beginMetafile / endMetafile calls, thanks to the geometry cache that has been enabled.

When the playMetafile call occurs, in the context of the current view, we store the entities that correspond to this metafile in a list of visible entities for the view. This way, we build the instanced scene graph representing multiple views and set up the contents of each view.

The resolution of which entity is visible in which view is done by the ROD::OdBatch::Synchronize method.

A given entity visible several times is shared among different views. As entities are grouped by their similar properties, the whole batch is shared among different views. There’s a subtlety here as we have to insert intermediate nodes in the scene graph architecture to manage the floating origin used for the vectorization of a view’s private set of entities: each view uses a different floating origin matrix and we have to take this into consideration when an entity has been created in the context of a view and is seen in the context of another view.
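The bookkeeping described in this section can be sketched with a small table filled during playMetafile calls. The `VisibilityTable` class and its method names are hypothetical and written for illustration only; the sketch leaves out the floating origin handling and the intermediate scene graph nodes.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <set>

using ViewId = int;      // hypothetical identifier of a view
using MetafileId = int;  // hypothetical identifier of a metafile

// Records which metafiles were replayed in the context of which view.
class VisibilityTable {
public:
    // To be called while a metafile is replayed for a given view.
    void onPlayMetafile(ViewId view, MetafileId metafile) {
        visible_[view].insert(metafile);
    }

    // True if the metafile was replayed at least once for the view.
    bool isVisible(ViewId view, MetafileId metafile) const {
        const auto it = visible_.find(view);
        return it != visible_.end() && it->second.count(metafile) != 0;
    }

    // Number of views sharing the metafile.
    std::size_t viewCount(MetafileId metafile) const {
        std::size_t n = 0;
        for (const auto& entry : visible_) n += entry.second.count(metafile);
        return n;
    }

private:
    std::map<ViewId, std::set<MetafileId>> visible_;
};
```

A metafile whose `viewCount` is greater than one is the shared case mentioned above, where the floating origin of the creating view has to be reconciled with that of the other views.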

Translating Lines

There are several code paths in line translation. The way a line is drawn depends on the context of its visualization:

- In modelspace, lines always have a constant width. We translate lines into HOOPS Luminate lines.

- In paperspace, lines that have a nonzero thickness are zoomable. We translate them into polygons.

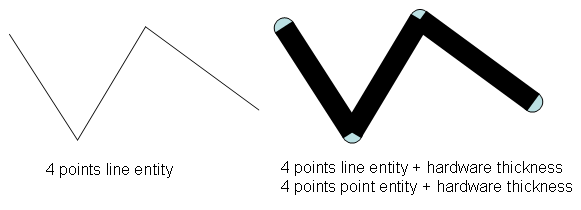

Lines in modelspace are translated into HOOPS Luminate lines. Thick lines receive a hardware thickness AND we add round ending points to the display, as illustrated by the picture below:

Thin and thick lines conversion in modelspace.

The thickness is managed at the hardware level. The setup occurs in the material associated to lines in ROD::OdMaterialId::ConvertToLineMaterial. Additionally, hardware thick points are added to the segments ending points to complete the rendering.

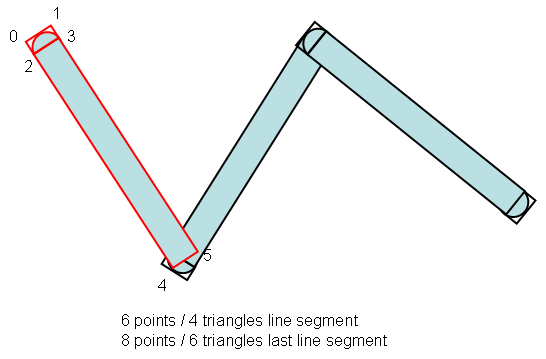

Lines in paperspace are translated into HOOPS Luminate polygons. Because these lines are zoomable, we can’t rely on the line primitive with a hardware thickness: the hardware thickness is constant and it’s not dynamically customizable. Therefore, we setup another kind of display, as detailed below:

Thick lines conversion in paperspace.

Every line segment is turned into a textured polygon with 4 triangles (for example, the first segment of the example polyline colored in red). The texture that defines the round end shape of the polygon is defined within ROD::OdMaterialId::ConvertToThickLineMaterial. UVs are chosen so that the round appear at one of the segment’s end. The last segment of the polyline is set with two round endings so that the line is completed.

This setup has several advantages:

- The quality of the visualized thick lines remains good up to very large zoom scales

- The rendering speed of these textured polygons is very good
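The geometric part of the segment to polygon conversion can be sketched as follows. This is a simplified illustration, not the device code: it only computes the four corners of a rectangle around a segment, whereas the device emits 4 triangles per segment and assigns UVs for the round end texture. The `Vec2` type and the `segmentToQuad` function are hypothetical names.

```cpp
#include <array>
#include <cassert>
#include <cmath>

struct Vec2 { double x, y; };  // hypothetical 2D point type

// Returns the four corners of a rectangle of the given width centered on the
// segment (a, b), in counter-clockwise order, starting on the right of a.
inline std::array<Vec2, 4> segmentToQuad(Vec2 a, Vec2 b, double width) {
    const double dx = b.x - a.x;
    const double dy = b.y - a.y;
    const double len = std::sqrt(dx * dx + dy * dy);
    assert(len > 0.0 && width > 0.0);

    // Left-hand normal of the segment, scaled to half the line width.
    const double h = 0.5 * width;
    const double nx = -dy / len * h;
    const double ny = dx / len * h;

    return {{ {a.x - nx, a.y - ny}, {b.x - nx, b.y - ny},
              {b.x + nx, b.y + ny}, {a.x + nx, a.y + ny} }};
}
```

Because the width is expressed in drawing units rather than pixels, the resulting polygon scales with the view, which is what makes the line zoomable.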

A similar tutorial can be found in HOOPS Luminate’s list of tutorials. Please look here for details: Rendering Any Line Thickness Using Quads.

Translating Fonts

Fonts are translated into a special HOOPS Luminate type of font (RED::FNT_TEXTURE) that combines several benefits:

- The rendering speed is very good

- The quality is good, and text stays readable even when it appears very small on screen

- The font smoothly zooms in and out

The only disadvantage of this type of font is that it consumes video memory to store the font glyphs into textures. The amount of memory used can be controlled in the code in the ExGsOpenGLVectorizeView::textProc method, by setting lower values in the RED::IFont::SetGlyphTextureSize method.

Textured fonts can be disabled if desired by reverting to the classic OdGiGeometrySimplifier::textProc for all texts.

Color per Material or Color per Vertex?

The REDOdaDevice stores colors of objects as geometrical attributes rather than as material attributes. The rationale behind this is about memory and performance.

First, HOOPS Luminate materials are quite expensive: a HOOPS Luminate material stores rendering configurations for each kind of graphics card and each layerset defined by the application, and features several lists of shaders, each containing complete shading programs, parameters, etc.

Second, the HOOPS Luminate primitive batching process is done per material. At the hardware level, we can’t render geometries that use different materials without breaking the display pipeline to change the setup each time the material changes.

As a result, configurations such as a color wheel with hundreds of very simple primitives, each using a different color, can’t be rendered using one material for each color that exists in the model: this would be too costly in terms of memory and would break the performance.

Therefore, it sounds natural to store the color as a geometry attribute rather than as a material attribute. This is not really expensive in terms of memory as the 4 extra bytes required to store a color on a vertex are in contiguous memory blocks. In terms of performance, the resulting speedup is good: nearly all objects that share material properties as they are described in the ROD::OdMaterialId are drawn as a single batch.
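The effect on batching can be illustrated with a short sketch. The `Primitive` structure, the byte order chosen in `packColor` and the two counting functions are assumptions made for this example; they do not reproduce ROD::OdMaterialId or any HOOPS Luminate API.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <set>
#include <utility>
#include <vector>

// Hypothetical primitive: a material key plus a packed 4 byte color.
struct Primitive { int materialId; std::uint32_t rgba; };

// Packs ( r, g, b, a ) into 4 bytes (the byte order is an arbitrary choice here).
inline std::uint32_t packColor(std::uint8_t r, std::uint8_t g,
                               std::uint8_t b, std::uint8_t a) {
    return (std::uint32_t(r) << 24) | (std::uint32_t(g) << 16) |
           (std::uint32_t(b) << 8) | std::uint32_t(a);
}

// Batches needed if the color were part of the material: one per (material, color).
inline std::size_t batchesColorInMaterial(const std::vector<Primitive>& prims) {
    std::set<std::pair<int, std::uint32_t>> keys;
    for (const Primitive& p : prims) keys.emplace(p.materialId, p.rgba);
    return keys.size();
}

// Batches needed if the color is a vertex attribute: one per material.
inline std::size_t batchesColorPerVertex(const std::vector<Primitive>& prims) {
    std::set<int> keys;
    for (const Primitive& p : prims) keys.insert(p.materialId);
    return keys.size();
}
```

For a color wheel of 300 primitives that share every other material property, the first function returns 300 and the second returns 1.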

Draw Order with Custom Z Sorting

Rendering order is another complex part of the device. The difficulty is that we discover what we have to do on the fly, along with the ExGsOpenGLVectorizeView::playMetafile calls that we receive for each view.

The rendering order of objects is natural for all 3D models that use a lighting model based on shading: in this case, the z-buffer defines which object is visible for each pixel on screen. No problem here.

If we consider color based materials, then the rendering order is no longer defined by any natural z-buffer sorting, but rather by the flow of playMetafile calls that we receive during the vectorization process of the view. Here comes the problem.

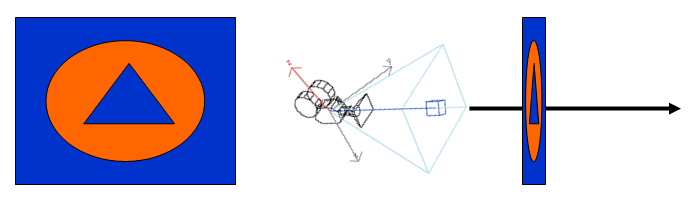

To understand the difference between the retained mode HOOPS Luminate API and an immediate mode style API like the Teigha vectorization system, let’s consider the following example:

Illustrating the z sorting problem.

Our simple scene is made of a blue rectangle, an orange disk and a blue triangle. All these primitives are defined on the same plane, making any z-sorting effort completely useless.

If we render this scene through the vectorization process, we’ll receive three ExGsOpenGLVectorizeView::playMetafile calls:

- playMetafile( blue rectangle )

- playMetafile( orange disk )

- playMetafile( blue triangle )

This defines the correct back-to-front rendering order needed for our small scene. If we render the same scene with HOOPS Luminate, blue objects will be drawn first and then orange objects will be drawn (HOOPS Luminate is using a per material rendering system). Because the z-buffer has no effect here, we won’t get the correct picture.
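The reordering can be reproduced with a few lines of code. The `DrawCall` structure and the `groupByMaterial` function are hypothetical; they only model a renderer that draws all primitives of one material before moving to the next, keeping the submission order within each material.

```cpp
#include <algorithm>
#include <cassert>
#include <string>
#include <vector>

// Hypothetical draw call: a primitive name and the material it uses.
struct DrawCall { std::string name; int materialId; };

// Groups draw calls by material, keeping submission order within a material.
inline std::vector<DrawCall> groupByMaterial(std::vector<DrawCall> calls) {
    std::stable_sort(calls.begin(), calls.end(),
                     [](const DrawCall& a, const DrawCall& b) {
                         return a.materialId < b.materialId;
                     });
    return calls;
}
```

With the blue material numbered 0 and the orange material numbered 1, the submitted order rectangle, disk, triangle becomes rectangle, triangle, disk: the disk is now drawn over the triangle, which is not the intended picture.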

Solving the Z Sorting Issue with HOOPS Luminate

HOOPS Luminate has several mechanisms to customize the rendering order of drawn geometries:

- Camera lists are prioritized; they can be rendered back to front and they can share their z-buffer space or not (see Viewpoint Render Lists under section Contents of a VRL).

- Materials can be assigned priorities, so that we get exact control of the rendering order of all primitives that use a material vs. all primitives that use another material (see RED::IMaterial::SetPriority).

- Geometries that are rendered with the same material are rendered in their creation order.

- Finally, the z-buffer defines what’s visible for each screen pixel when enabled (see Hardware vs. Software Rendering under section A Word on Scanline and Z-Buffer Rendering).

All these mechanisms are based on a categorization of primitives (a grouping by material, by scene order or any other semantic sorting system). In the Teigha architecture, we don’t have such information available. We only know that we have to render a metafile submitted by ExGsOpenGLVectorizeView::playMetafile before the one coming after it.

We capture the draw order information during the vectorization process:

- We add an extra geometry channel to all objects that are rendered in k2dOptimized or k2dWireframe mode. It’s stored in the RED::MCL_USER0 data channel and is a single float that contains a depth value in [ 0.0f, 1.0f ].

- All shaders write this single floating point depth value as the final shader z output, rather than the z value resulting from the geometry rendering.

- For each object that is created, we change this depth value, making it a little bit closer to the camera than the depth of the previous object ( we start at 1.0f and get closer to the camera at 0.0f ).

This solves the rendering order issue, while keeping the best display performances.
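The principle can be sketched on the CPU side as follows. The `DepthAllocator` class, its step of 1.0e-6f and the single pixel `resolvePixel` function are assumptions made for illustration; the step actually used by the device and the shader code are not shown here.

```cpp
#include <algorithm>
#include <cassert>
#include <limits>
#include <vector>

// Hands out decreasing depth values, from 1.0f (far) toward 0.0f (near).
// The step value is a hypothetical choice for this sketch.
class DepthAllocator {
public:
    explicit DepthAllocator(float step = 1.0e-6f) : step_(step) {
        assert(step > 0.0f);
    }

    // Returns the depth for the next object in submission order.
    float next() {
        const float d = depth_;
        depth_ = std::max(0.0f, depth_ - step_);
        return d;
    }

private:
    float step_;
    float depth_ = 1.0f;
};

// One fragment of an object covering a given pixel.
struct Fragment { int objectId; float depth; };

// Less-than depth test on a single pixel: the closest fragment wins,
// whatever the order in which fragments are rasterized.
inline int resolvePixel(const std::vector<Fragment>& fragments) {
    int winner = -1;
    float best = std::numeric_limits<float>::infinity();
    for (const Fragment& f : fragments) {
        if (f.depth < best) { best = f.depth; winner = f.objectId; }
    }
    return winner;
}
```

Since the last submitted object always receives the smallest depth, it wins the depth test even when the renderer draws primitives grouped by material, so the per material batching is preserved.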