Writing a Custom Rendering Shader in ARB

This tutorial shows how to create a custom render shader by writing vertex and pixel programs in the ARB assembly language. It is divided into three steps:

- Writing an ambient shader

- Writing a Phong shader

- Writing a Phong shader using a diffuse texture

Writing an ARB Shader with HOOPS Luminate

Loading Shaders

There is no difference between loading an ARB shader program and a GLSL program: the RED::IResourceManager::LoadShaderFromString method is used for both kinds of programs.

The engine automatically detects the kind of shader it receives from the standard header that every ARB program must start with (for example, !!ARBfp1.0 for a program compatible with GL_ARB_fragment_program). A shader that does not start with an ARB header is treated as a GLSL shader.
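The detection rule can be sketched in a few lines of C++. This is a minimal illustration, not the engine's implementation: `ShaderKind` and `DetectShaderKind` are hypothetical names, only the two ARB headers used in this tutorial are checked, and the header is assumed to be the very first characters of the string.

```cpp
#include <string>

// Hypothetical classification mirroring the rule described above (not engine code).
enum class ShaderKind { ArbVertex, ArbFragment, Glsl };

// ARB programs must start with their header; anything else is treated as GLSL.
// Other assembly program headers are deliberately not handled in this sketch.
ShaderKind DetectShaderKind( const std::string& source )
{
    // rfind( prefix, 0 ) == 0 is a C++11-compatible "starts with" test.
    if( source.rfind( "!!ARBvp1.0", 0 ) == 0 ) return ShaderKind::ArbVertex;
    if( source.rfind( "!!ARBfp1.0", 0 ) == 0 ) return ShaderKind::ArbFragment;
    return ShaderKind::Glsl;
}
```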

Here is the loading of our ambient shader:

```cpp
// b. A vertex shader in ARB:
RC_TEST( AmbientVertexShaderProgram( program ) );
RC_TEST( iresmgr->LoadShaderFromString( shaderID, program ) );
RC_TEST( ambient.SetVertexProgramId( shaderID, RED_L0, resmgr ) );

// c. A pixel shader in ARB:
RC_TEST( AmbientPixelShaderProgram( program ) );
RC_TEST( iresmgr->LoadShaderFromString( shaderID, program ) );
RC_TEST( ambient.SetPixelProgramId( shaderID, RED_L0, resmgr ) );
```

‘AmbientVertexShaderProgram’ and ‘AmbientPixelShaderProgram’ are tutorial functions that simply build the RED::ShaderString of our ambient shader.

Binding Vertex Shader Inputs

Vertex shader inputs are bound using the RED::RenderCode mechanism; consequently, named vertex shader input attributes are not supported. The following table shows the correspondence between RED::RenderCode channels and standard ARB vertex shader inputs:

| Vertex channel | ARB inputs |
|---|---|
| RED_VSH_VERTEX | vertex.attrib[0] |
| RED_VSH_NORMAL | vertex.attrib[2] |
| RED_VSH_COLOR | vertex.attrib[3] |
| RED_VSH_TEX0 to RED_VSH_TEX7 | vertex.attrib[8] to vertex.attrib[15] |
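When ARB source text is generated programmatically, the fixed mapping above can be captured in a small lookup. The sketch below is hypothetical: it works on channel names as strings rather than on the actual RED_VSH_* constants, whose numeric values are not assumed here.

```cpp
#include <string>

// Hypothetical helper mirroring the table above: returns the generic ARB
// vertex attribute index fed by a RED::RenderCode channel, or -1 if unknown.
int ArbAttribIndex( const std::string& channel )
{
    if( channel == "RED_VSH_VERTEX" ) return 0;
    if( channel == "RED_VSH_NORMAL" ) return 2;
    if( channel == "RED_VSH_COLOR" )  return 3;

    // RED_VSH_TEX0 to RED_VSH_TEX7 map to vertex.attrib[8] to vertex.attrib[15].
    const std::string texPrefix = "RED_VSH_TEX";
    if( channel.size() == texPrefix.size() + 1 &&
        channel.compare( 0, texPrefix.size(), texPrefix ) == 0 )
    {
        const char digit = channel.back();
        if( digit >= '0' && digit <= '7' ) return 8 + ( digit - '0' );
    }
    return -1;
}

// Builds the ARB input name, e.g. "vertex.attrib[8]"; empty if the channel is unknown.
std::string ArbAttribName( const std::string& channel )
{
    const int index = ArbAttribIndex( channel );
    return index < 0 ? std::string() : "vertex.attrib[" + std::to_string( index ) + "]";
}
```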

The ambient shader only needs vertex position:

```cpp
// a. Geometrical shader input:
RED::RenderCode rcode;
rcode.BindChannel( RED_VSH_VERTEX, RED::MCL_VERTEX );
RC_TEST( ambient.SetRenderCode( rcode, RED_L0 ) );
```

Binding Parameters

ARB program locals are bound using regular RED::RenderShaderParameter instances, added as parameters to the shader. The corresponding ARB bindings are (‘n’ is the binding position):

| Parameter type | ARB binding |
|---|---|
| RED::RenderShaderParameter::VECTOR | program.local[n]: ( x, y, z, w ) |
| RED::RenderShaderParameter::VECTOR3 | program.local[n]: ( x, y, z, 1.0 ) |
| RED::RenderShaderParameter::COLOR | program.local[n]: ( r, g, b, a ) |
| RED::RenderShaderParameter::FLOAT | program.local[n]: ( f, f, f, f ) |
| RED::RenderShaderParameter::BOOL | program.local[n]: ( b, b, b, b ), with 1.0 = true and 0.0 = false |
| RED::RenderShaderParameter::TEXTURE | texture[n] |
| RED::RenderShaderParameter::MATRIX | program.local[n] to program.local[n+3] |
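The table can be read as a set of packing rules into four-component locals. The C++ sketch below restates them; all names are hypothetical (this is not HOOPS Luminate API), and it assumes, as the Phong example later suggests by using both program.local[0] and texture[0], that texture units and locals are numbered independently. One practical consequence: a MATRIX bound at position n occupies four locals, so the next local parameter must be bound at n + 4 or later.

```cpp
#include <array>

// One ARB program local: four floats.
using Local = std::array<float, 4>;

// Hypothetical packing helpers following the table above (not HOOPS Luminate API).
Local FromVector( float x, float y, float z, float w ) { return { x, y, z, w }; }
Local FromVector3( float x, float y, float z )        { return { x, y, z, 1.0f }; }
Local FromColor( float r, float g, float b, float a ) { return { r, g, b, a }; }
Local FromFloat( float f )                            { return { f, f, f, f }; }

Local FromBool( bool b )
{
    const float v = b ? 1.0f : 0.0f;  // 1.0 = true, 0.0 = false, replicated
    return { v, v, v, v };
}

enum class ParamType { Vector, Vector3, Color, Float, Bool, Texture, Matrix };

// Number of program.local slots consumed by a parameter bound at position n.
int LocalSlotsUsed( ParamType type )
{
    switch( type )
    {
        case ParamType::Matrix:  return 4;  // program.local[n] to program.local[n+3]
        case ParamType::Texture: return 0;  // bound to texture[n] (assumed separate numbering)
        default:                 return 1;
    }
}
```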

For instance, the parameters sent to the ambient shader are:

```cpp
// d. Some shader parameters:
RED::RenderShaderParameter light_ambient( "light_ambient", 0, RED::RenderShaderParameter::PSH );
light_ambient.SetReference( RED::RenderShaderParameter::REF_LIGHT_AMBIENT );
RC_TEST( ambient.AddParameter( light_ambient, RED_L0 ) );

RED::RenderShaderParameter object_color( "object_color", 1, RED::RenderShaderParameter::PSH );
object_color.SetValue( g_torus_color );
RC_TEST( ambient.AddParameter( object_color, RED_L0 ) );
```

Writing ARB Ambient Shader

The ambient shader is one of the simplest render shaders: it only applies the object color modulated by the ambient light color.

The vertex shader performs only its primary task, transforming the vertex position:

```cpp
shader.VertexShaderStart();
shader.VertexTransform( "result.position", "state.matrix.program[2]", "vertex.attrib[0]" );
shader.ShaderEnd();
```

The pixel shader outputs the color:

```cpp
shader.PixelShaderStart();
// result.color = light_ambient * object_color, with an opaque alpha:
shader.Add( "MUL result.color, program.local[0], program.local[1];\n" );
shader.Add( "MOV result.color.a, {1.0}.x;\n" );
shader.ShaderEnd();
```

This code sample shows that the two RED::RenderShaderParameter instances defined previously are available in the ARB program as program.local[0] (light_ambient) and program.local[1] (object_color).
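For readers who want to see raw ARB text, here is a hand-written equivalent of the two ambient programs, stored as C++ strings. It is a sketch under an assumption: that RED::ShaderString's start/end helpers emit the `!!ARBvp1.0` / `!!ARBfp1.0` header and the final `END`, and that `VertexTransform` amounts to one `DP4` per matrix row. The exact text generated by HOOPS Luminate may differ.

```cpp
#include <string>

// Hand-written ARB vertex program (assumed equivalent, not the helper's actual output):
// clip-space position = high definition MVP matrix * object-space position.
const std::string kAmbientVertexProgram =
    "!!ARBvp1.0\n"
    "PARAM mvp[4] = { state.matrix.program[2] };\n"
    "DP4 result.position.x, mvp[0], vertex.attrib[0];\n"
    "DP4 result.position.y, mvp[1], vertex.attrib[0];\n"
    "DP4 result.position.z, mvp[2], vertex.attrib[0];\n"
    "DP4 result.position.w, mvp[3], vertex.attrib[0];\n"
    "END\n";

// Hand-written ARB fragment program: ambient light color (local 0)
// times object color (local 1), with alpha forced to 1.0.
const std::string kAmbientPixelProgram =
    "!!ARBfp1.0\n"
    "MUL result.color, program.local[0], program.local[1];\n"
    "MOV result.color.a, {1.0}.x;\n"
    "END\n";
```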

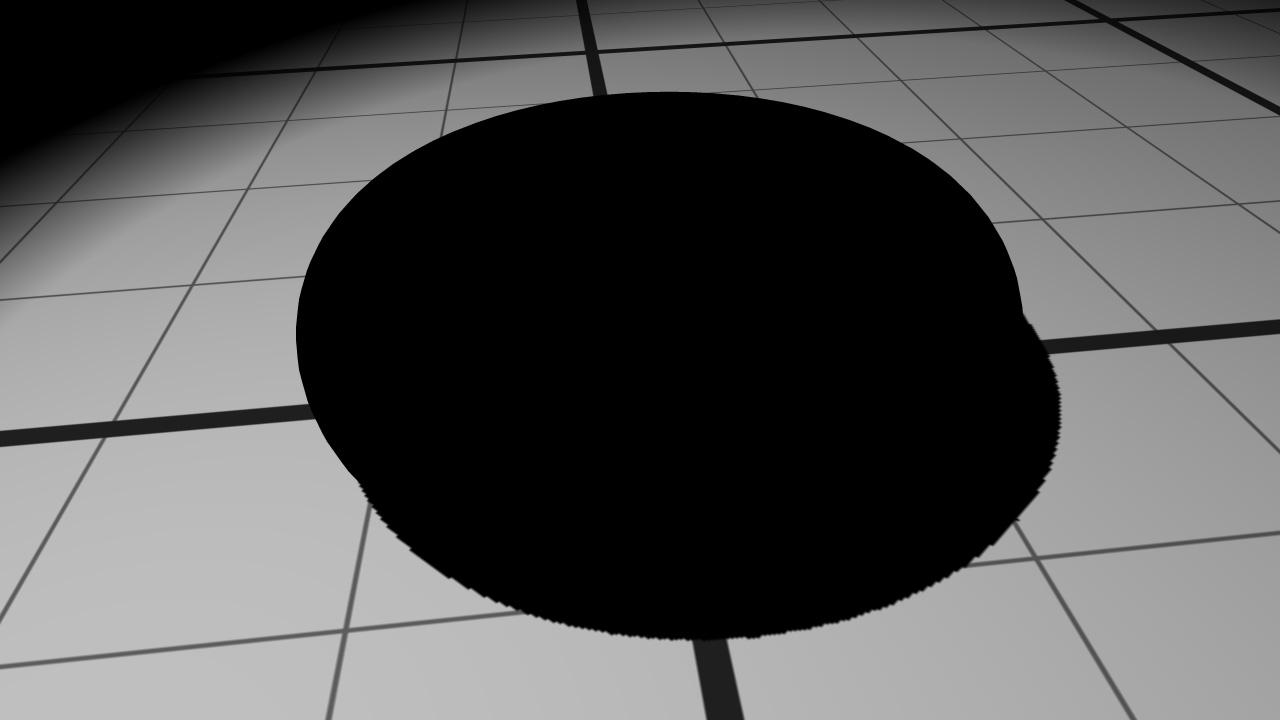

The material after ambient shading

The object appears black despite its color because there is no ambient light color in our scene.
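A CPU mirror of the ambient pixel program makes this result easy to check. The sketch assumes that, when the scene has no ambient light, the value bound through REF_LIGHT_AMBIENT is black; `AmbientPixel` is a hypothetical name and the object colors are arbitrary examples.

```cpp
#include <array>

using Color = std::array<float, 4>;  // r, g, b, a

// Hypothetical CPU mirror of:
//   MUL result.color, program.local[0], program.local[1];
//   MOV result.color.a, {1.0}.x;
Color AmbientPixel( const Color& lightAmbient, const Color& objectColor )
{
    Color out{};
    for( int i = 0; i < 4; ++i )
        out[i] = lightAmbient[i] * objectColor[i];  // component-wise product
    out[3] = 1.0f;                                  // alpha forced to 1.0
    return out;
}
```

With a black ambient color, any object color yields black: the product zeroes every RGB component regardless of the object color.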

A Word on Matrix Transforms

The vertex programs of this tutorial do not use the modelview-projection matrix that ARB programs can access by default as ‘state.matrix.mvp’. They use its high definition equivalent, ‘state.matrix.program[2]’, as detailed by RED::RenderCode::SetModelViewProjectionMatrix. Unlike the default OpenGL matrix, the matrix bound by RED::RenderCode is suitable for solving floating origin issues (see Floating Origins).

Equivalent high definition matrices also exist for the modelview matrix (RED::RenderCode::SetModelViewMatrix) and for the view matrix (RED::RenderCode::SetViewMatrix).
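The precision problem behind floating origins can be shown with a one-dimensional, translation-only example. This is a generic illustration of the principle, not HOOPS Luminate's implementation: it assumes the high definition matrix is composed in double precision on the CPU before being converted to float for the GPU, and the coordinates and function names are hypothetical.

```cpp
// Hypothetical example: an object translated 10,000,000.5 units along x,
// seen by a camera at x = 10,000,000. A vertex sits at x = 0.25 in object space.

// High definition path (assumed): combine model and view translations in
// double first, so the large values cancel before the conversion to float.
float HighDefinitionEyeX( double modelTx, double cameraX, float localX )
{
    const float modelView = static_cast<float>( modelTx - cameraX );  // 0.5f for the example
    return localX + modelView;                                         // 0.75f for the example
}

// Single precision path: each translation is stored in float and applied in turn.
float SinglePrecisionEyeX( double modelTx, double cameraX, float localX )
{
    const float model = static_cast<float>( modelTx );  // 10000000.5 rounds to 10000000.0f
    const float view = static_cast<float>( -cameraX );  // -10000000.0f
    const float world = localX + model;                 // the 0.25 offset is rounded away
    return world + view;                                // 0.0f instead of 0.75f
}
```

Near 10^7, consecutive single precision values are 1.0 apart, so sub-unit detail is lost as soon as a small object-space coordinate is added to a large world translation; composing the matrices first keeps the values small.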

Writing ARB Phong Shader

In a second step, a Phong shader is created and added to the object material. Phong shading adds diffuse and specular contributions to the existing ambient color. The Phong lighting calculation is done in the pixel shader because it needs interpolated normals and positions.

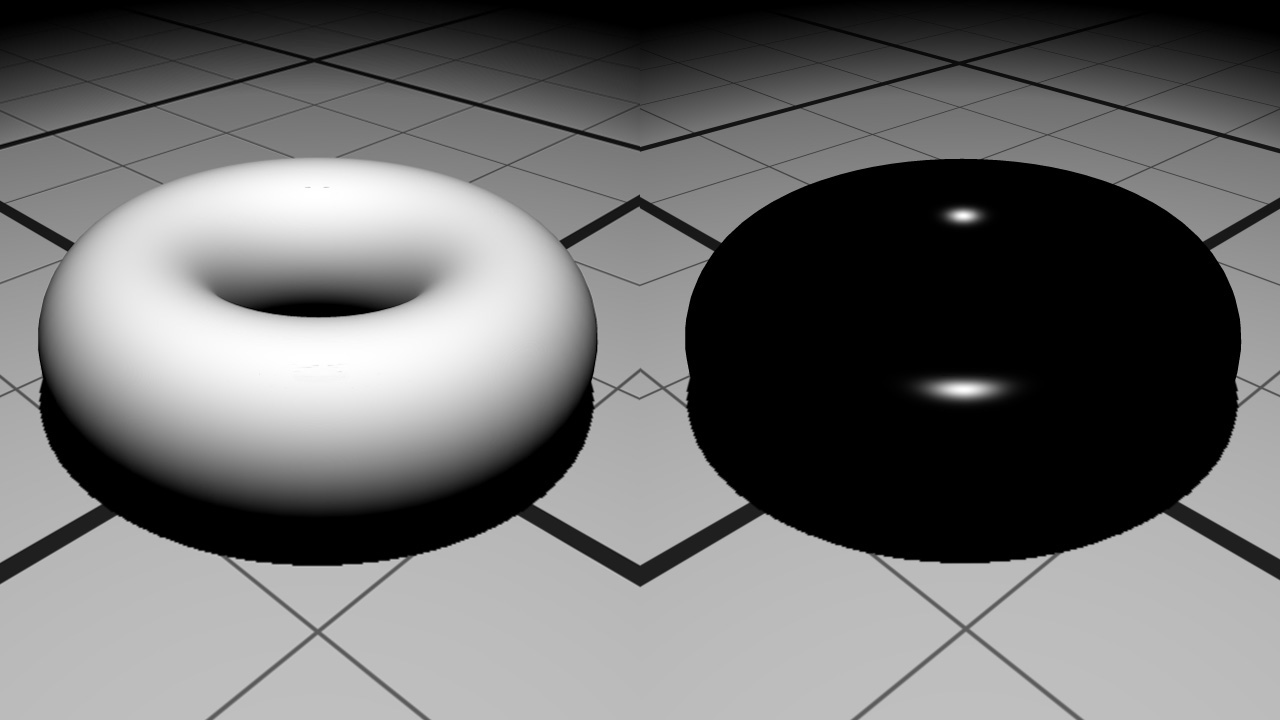

Diffuse color on the left and specular color on the right

The positions and normals have to be transmitted from the geometry to the pixel shader. First, the RED::RenderCode binds them:

```cpp
// a. Geometrical shader input:
RED::RenderCode rcode;
rcode.BindChannel( RED_VSH_VERTEX, RED::MCL_VERTEX );
rcode.BindChannel( RED_VSH_NORMAL, RED::MCL_NORMAL );
// Make the world (model) matrix available as state.matrix.program[1]:
rcode.SetModelMatrix( true );
RC_TEST( phong.SetRenderCode( rcode, RED_LS ) );
```

In the vertex shader, they are accessed through the ‘vertex.attrib[0]’ and ‘vertex.attrib[2]’ ARB variables and sent to the next pipeline stage using the ‘result.texcoord’ array:

```cpp
shader.VertexShaderStart();

// Output regular fragment position using high definition modelview-projection matrix:
shader.VertexTransform( "result.position", "state.matrix.program[2]", "vertex.attrib[0]" );

// Transform position from object space to world space
// and transfer it to the next stage:
// state.matrix.program[1] contains the world matrix.
shader.VertexTransform( "result.texcoord[0]", "state.matrix.program[1]", "vertex.attrib[0]" );

// Transform normal from object space to world space
// and transfer it to the next stage:
shader.VertexTransform( "result.texcoord[1]", "state.matrix.program[1].invtrans", "vertex.attrib[2]" );

shader.ShaderEnd();
```

The data are transformed from object space to world space. To do this, the program needs the world matrix. It is transmitted through the ‘state.matrix.program[1]’ variable because we called the RED::RenderCode::SetModelMatrix function during the shader creation phase.
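Normals are transformed with ‘state.matrix.program[1].invtrans’ (the inverse transpose of the world matrix) rather than the world matrix itself. This matters as soon as the world matrix contains non-uniform scaling: the matrix itself would tilt normals away from the surface. The CPU sketch below checks this on a surface scaled by 2 along x; the helper names are hypothetical and only the 3x3 linear part is used, since translation does not affect directions.

```cpp
#include <array>
#include <cmath>

using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;  // row-major

Vec3 Mul( const Mat3& m, const Vec3& v )
{
    Vec3 r{};
    for( int i = 0; i < 3; ++i )
        r[i] = m[i][0] * v[0] + m[i][1] * v[1] + m[i][2] * v[2];
    return r;
}

double Dot( const Vec3& a, const Vec3& b ) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

// Inverse transpose of m = cofactor matrix of m divided by det( m ).
Mat3 InverseTranspose( const Mat3& m )
{
    Mat3 c{};
    c[0][0] = m[1][1] * m[2][2] - m[1][2] * m[2][1];
    c[0][1] = m[1][2] * m[2][0] - m[1][0] * m[2][2];
    c[0][2] = m[1][0] * m[2][1] - m[1][1] * m[2][0];
    c[1][0] = m[0][2] * m[2][1] - m[0][1] * m[2][2];
    c[1][1] = m[0][0] * m[2][2] - m[0][2] * m[2][0];
    c[1][2] = m[0][1] * m[2][0] - m[0][0] * m[2][1];
    c[2][0] = m[0][1] * m[1][2] - m[0][2] * m[1][1];
    c[2][1] = m[0][2] * m[1][0] - m[0][0] * m[1][2];
    c[2][2] = m[0][0] * m[1][1] - m[0][1] * m[1][0];
    const double det = m[0][0] * c[0][0] + m[0][1] * c[0][1] + m[0][2] * c[0][2];
    for( auto& row : c )
        for( double& x : row ) x /= det;
    return c;
}

// Example: scale by 2 along x. Tangent ( 1, 1, 0 ) and normal ( 1, -1, 0 ) are perpendicular.
const Mat3 kScaleX2 = {{ {{ 2.0, 0.0, 0.0 }}, {{ 0.0, 1.0, 0.0 }}, {{ 0.0, 0.0, 1.0 }} }};
const Vec3 kTangent = {{ 1.0, 1.0, 0.0 }};
const Vec3 kNormal  = {{ 1.0, -1.0, 0.0 }};
```

With the world matrix, the transformed normal ( 2, -1, 0 ) and tangent ( 2, 1, 0 ) have a dot product of 3; with the inverse transpose, the normal becomes ( 0.5, -1, 0 ) and stays perpendicular.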

The pixel shader contains all the Phong shading calculations. It receives the interpolated positions and normals through its ‘fragment.texcoord’ array, and several parameters as ‘program.local’.

```cpp
shader.PixelShaderStart();

// Get parameters:
shader.Param( "lightPos", 0 );
shader.Param( "lightColor", 1 );
shader.Param( "objectColor", 2 );
shader.Param( "eyePos", 3 );

// light = normalize( lightPos - in.position )
shader.Temp( "light" );
shader.Add( "SUB light, lightPos, fragment.texcoord[0];\n" );
shader.Normalize( "light", "light" );

// normal = normalize( in.normal )
shader.Temp( "normal" );
shader.Normalize( "normal", "fragment.texcoord[1]" );

// eye = normalize( eyePos - in.position )
shader.Temp( "eye" );
shader.Add( "SUB eye, eyePos, fragment.texcoord[0];\n" );
shader.Normalize( "eye", "eye" );

// dot = clamp( dot( normal, light ), 0.0, 1.0 )
shader.Temp( "dot" );
shader.Add( "DP3 dot.x, normal, light;\n" );
shader.Add( "MAX dot.x, dot.x, {0.0}.x;\n" );
shader.Add( "MIN dot.x, dot.x, {1.0}.x;\n" );

// reflect = 2.0 * dot * normal - light;
shader.Temp( "reflect" );
shader.Add( "MUL reflect, dot.x, {2.0}.x;\n" );
shader.Add( "MUL reflect, reflect, normal;\n" );
shader.Add( "SUB reflect, reflect, light;\n" );

// diffuse = dot;
shader.Temp( "diffuse" );
shader.Add( "MOV diffuse.x, dot.x;\n" );

// dot = clamp( dot( reflect, eye ), 0.0, 1.0 )
shader.Add( "DP3 dot.x, reflect, eye;\n" );
shader.Add( "MAX dot.x, dot.x, {0.0}.x;\n" );
shader.Add( "MIN dot.x, dot.x, {1.0}.x;\n" );

// specular = pow( dot, 60.0 )
shader.Temp( "specular" );
shader.Add( "POW specular.x, dot.x, {60}.x;\n" );

// color = objectColor * lightColor * ( diffuse + specular )
shader.Temp( "color" );
shader.Add( "MUL color, objectColor, lightColor;\n" );
shader.Add( "ADD diffuse.x, diffuse.x, specular.x;\n" );
shader.Add( "MUL color, color, diffuse.x;\n" );

// shadow = sample of texture[0] (RECT) at the fragment's window position
shader.Temp( "shadow" );
shader.Add( "TEX shadow, fragment.position, texture[0], RECT;\n" );

// result.color = color * shadow
shader.Add( "MUL result.color, color, shadow;\n" );

shader.ShaderEnd();
```

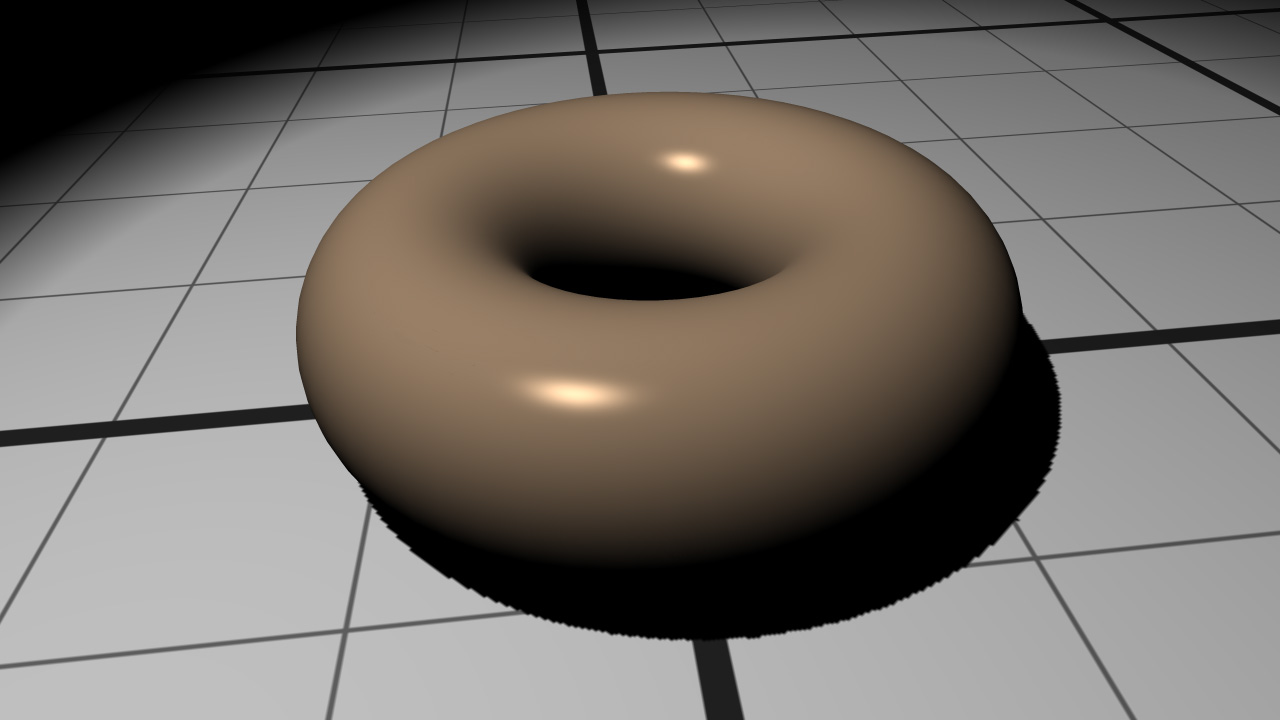

The material after Phong shading
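To check the math of the Phong pixel program outside the GPU, the same steps can be mirrored on the CPU. This is an illustrative sketch, not engine code: `PhongPixel` and the vector helpers are hypothetical, and the `shadow` argument stands in for the RECT texture sample, which the engine provides at render time.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

using Vec3 = std::array<float, 3>;
using Vec4 = std::array<float, 4>;

static Vec3 Sub( const Vec3& a, const Vec3& b ) { return { a[0] - b[0], a[1] - b[1], a[2] - b[2] }; }
static float Dot3( const Vec3& a, const Vec3& b ) { return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]; }

static Vec3 Normalize( const Vec3& v )
{
    const float length = std::sqrt( Dot3( v, v ) );
    return { v[0] / length, v[1] / length, v[2] / length };
}

// Hypothetical CPU mirror of the tutorial's Phong pixel program (illustration only).
// 'shadow' stands in for the RECT shadow texture sample.
Vec4 PhongPixel( const Vec3& position, const Vec3& interpolatedNormal,
                 const Vec3& lightPos, const Vec4& lightColor,
                 const Vec4& objectColor, const Vec3& eyePos, const Vec4& shadow )
{
    const Vec3 light = Normalize( Sub( lightPos, position ) );
    const Vec3 normal = Normalize( interpolatedNormal );
    const Vec3 eye = Normalize( Sub( eyePos, position ) );

    // dot = clamp( dot( normal, light ), 0.0, 1.0 ); diffuse = dot
    const float diffuse = std::clamp( Dot3( normal, light ), 0.0f, 1.0f );

    // reflect = 2.0 * dot * normal - light
    Vec3 reflect{};
    for( int i = 0; i < 3; ++i )
        reflect[i] = 2.0f * diffuse * normal[i] - light[i];

    // specular = pow( clamp( dot( reflect, eye ), 0.0, 1.0 ), 60.0 )
    const float specular = std::pow( std::clamp( Dot3( reflect, eye ), 0.0f, 1.0f ), 60.0f );

    // result.color = objectColor * lightColor * ( diffuse + specular ) * shadow
    Vec4 result{};
    for( int i = 0; i < 4; ++i )
        result[i] = objectColor[i] * lightColor[i] * ( diffuse + specular ) * shadow[i];
    return result;
}
```

Like the ARB program, the sketch does not clamp the final color: with the light and the eye both facing the surface head-on, diffuse and specular each reach 1.0 and the color is doubled.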

Writing ARB Textured Phong Shader

In the last step of the tutorial, we will see how to replace the object color with a texture image. To apply a texture correctly, a shader program needs texture coordinates. Our object has texture coordinates stored in the RED::MCL_TEX0 geometry channel.

Note

If your object does not have texture coordinates, HOOPS Luminate provides a useful function to build them: RED::IMeshShape::BuildTextureCoordinates.

Like the vertex and normal channels, the texture coordinates channel needs to be bound in the RED::RenderCode object:

```cpp
rcode.BindChannel( RED_VSH_TEX0, RED::MCL_TEX0 );
```

The data is then retrieved in the vertex shader using the ‘vertex.attrib[8]’ variable and transmitted to the pixel shader in ‘result.texcoord[2]’:

```cpp
// Transfer the texture coordinates to the next stage:
shader.Add( "MOV result.texcoord[2], vertex.attrib[8];\n" );
```

The pixel shader accesses the texture image through the ‘texture[1]’ parameter and samples it at the texture coordinates read from ‘fragment.texcoord[2]’:

```cpp
// texCoord = in.texCoord * textureScale:
shader.Temp( "texCoord" );
shader.Add( "MUL texCoord, fragment.texcoord[2], textureScale.x;\n" );

// objectColor = texture sample
shader.Temp( "objectColor" );
shader.Add( "TEX objectColor, texCoord, texture[1], 2D;\n" );
```

A scale factor, ‘textureScale’, is also transmitted as a parameter to adjust the size of the texture on the object.
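The effect of ‘textureScale’ can be illustrated on the CPU with a tiny single-channel texture. The sketch assumes REPEAT wrapping and nearest-texel filtering (the real behavior depends on how the texture image is created), a row-major layout with row 0 at v = 0, and hypothetical names throughout.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

// Minimal single-channel texture, row-major, row 0 at v = 0 (assumption).
struct Texture
{
    int width;
    int height;
    std::vector<float> texels;
};

// Hypothetical mirror of "MUL texCoord, fragment.texcoord[2], textureScale.x"
// followed by a 2D fetch, assuming REPEAT wrapping and nearest filtering.
float SampleScaled( const Texture& tex, float u, float v, float textureScale )
{
    const float su = u * textureScale;
    const float sv = v * textureScale;
    // REPEAT wrapping: keep only the fractional part.
    const float fu = su - std::floor( su );
    const float fv = sv - std::floor( sv );
    // Nearest texel, guarded against rounding up to the texture size.
    const int x = std::min( static_cast<int>( fu * tex.width ), tex.width - 1 );
    const int y = std::min( static_cast<int>( fv * tex.height ), tex.height - 1 );
    return tex.texels[y * tex.width + x];
}

// Example 2x2 texture with texel values 0, 1 (first row) and 2, 3 (second row).
const Texture kExample2x2 = { 2, 2, { 0.0f, 1.0f, 2.0f, 3.0f } };
```

Under repeat wrapping, a scale of 2 tiles the texture twice across the [0, 1] coordinate range, so each copy appears half as large on the object.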

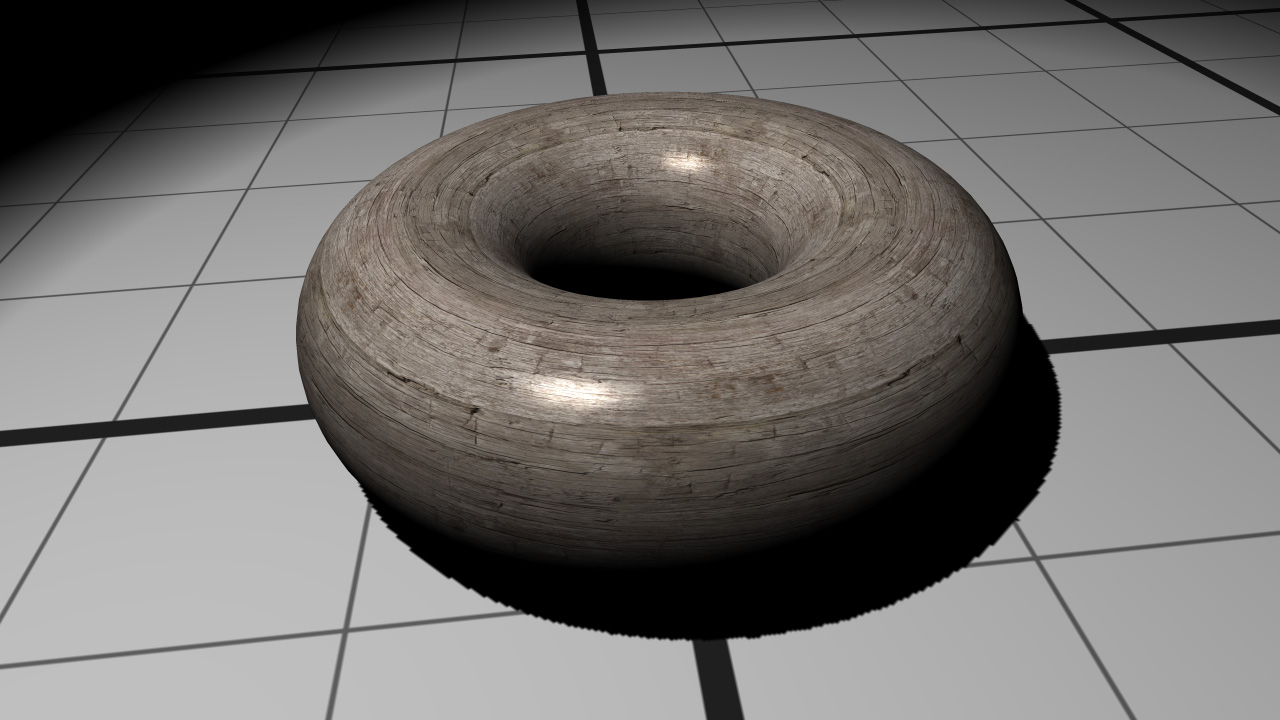

The material after textured Phong shading